Aurora Lens: AR Glasses for Adults with Autism Spectrum Disorder

Role

UX designer

Timeline

October–November 2025

Skills

UX strategy, Wireframing, Interactive prototyping, Cross-functional communication, Presentation

Team Dawnbreaker

June Kim (UX Designer), Silang Wang (PM), Stephanie Orellana (UX Researcher), Swaraj Thamke (Visual UX Designer)

Overview

I participated in Design Jam for Accessibility 2025, one of the signature events of Human Centered Design & Engineering (HCDE) at the University of Washington. After a month of intense research, ideation, and prototyping, our team presented Aurora Lens, an AR glasses concept designed to help autistic adults feel more comfortable and less anxious in new public spaces.

Working closely as a team, we collected 108 survey responses and ran 3 interviews, 2 co-design sessions, and 1 tabling session. These findings led us to design AR glasses with three key features, plus a connected app for controlling them.

Pain point

Sensory overload in public spaces

Autistic adults often struggle to navigate or stay calm in busy public spaces, where excessive noise, sudden movements, or harsh lighting heighten their anxiety and stress.

How might we help autistic adults feel more comfortable and less anxious in new public spaces?

Multi-method research approach

To understand users’ needs and challenges in public spaces, we combined four research methods: surveys, interviews, co-design sessions, and a tabling session.

👉 Co-design sessions: Whiteboard 1, Whiteboard 2

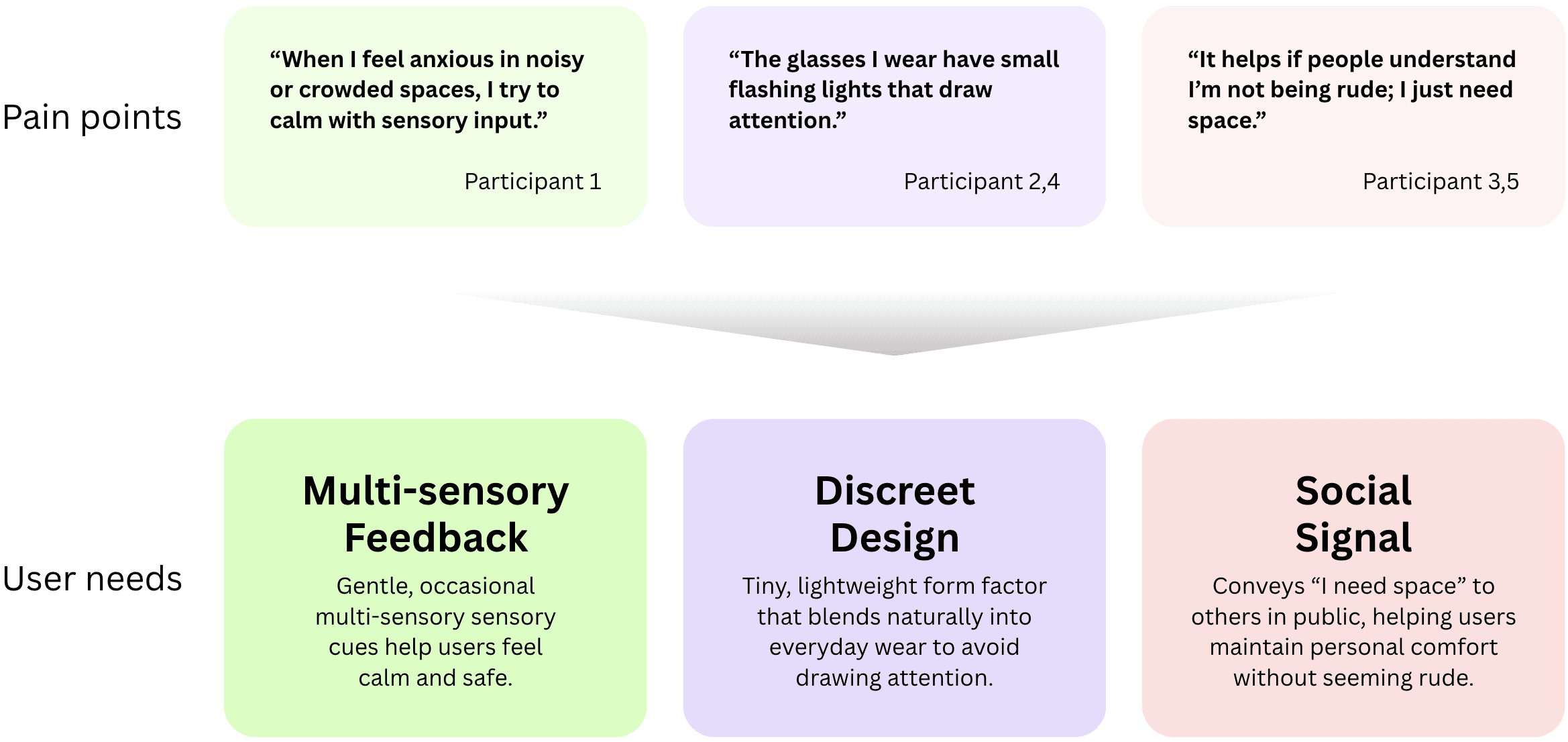

Key insights from user research

Our research revealed that users often feel overwhelmed in noisy or crowded spaces, want discreet wearable designs, and appreciate clear social cues that communicate their need for personal space. These insights shaped three core user needs: gentle multi-sensory feedback, discreet form factors, and subtle social signaling.

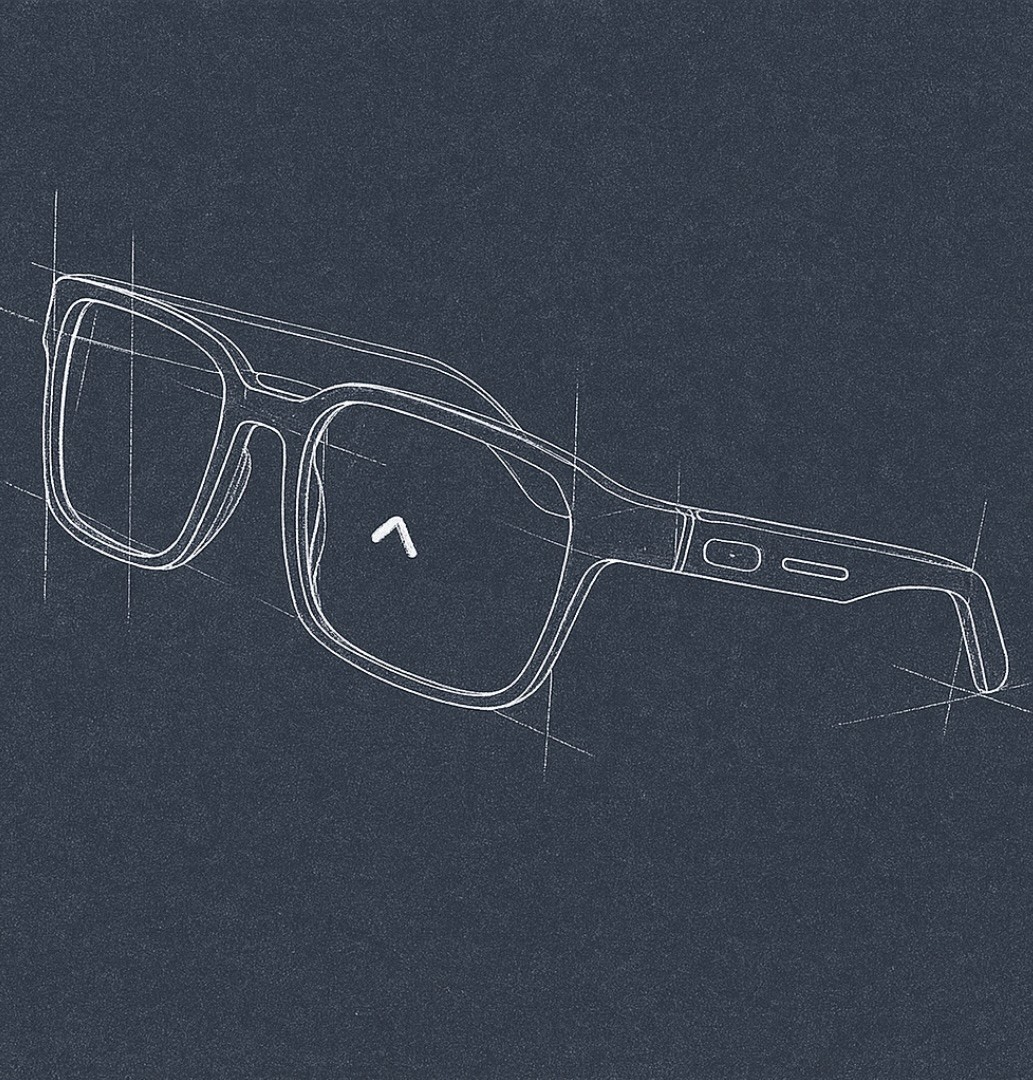

Solution

Sensory-supportive AR glasses

Grounded in these user insights, Aurora Lens helps autistic adults stay calm and comfortable in overstimulating public environments.

Features

1

Multi-sensory Navigational Feedback

Provides multi-sensory route guidance (temperature, sound, visuals, vibration) for smooth and comfortable navigation.

Temperature

Vibration/Sound

Visuals

2

Calm Immersive Mode

Offers distraction or meditation in AR/VR, using immersive nature scenes or familiar settings to reduce anxiety in unfamiliar environments.

3

Low Bucket Mode

Users can adjust lens transparency or polarization to filter unpleasant light and can dampen surrounding noise. The mode also signals a need for social withdrawal to people nearby, helping users preserve their mental bandwidth.


What is Low Bucket?

“Low bucket” is a term used in the autism community to describe a state of very low energy or reduced sensory capacity.

4

Connected App

Aurora Lens pairs with a companion app that lets users control its main features and switch between its three modes.

Product roadmap

Technical feasibility

Built on Existing AR Tech

Multi-sensory navigational feedback (audio, visual, vibration, and temperature)

Calm mode (Meta Ray-Ban Display, XREAL)

Low Bucket mode (transition lenses)

Expanding Through Versions

Light version: navigation and Low Bucket mode

Pro version: immersive and Low Bucket modes

Targets both autistic users and everyday users

Cost considerations

Affordable, Modular Design

Light version: an affordable entry-level model for new users, with strong reliability.

Pro version: additional sensory navigational cues and a fully immersive display; reliability still needs further work.

Economies of scale

Fits Within Accessibility Systems

Connect with smartphone accessibility settings

Integrate with public navigation systems in venues such as airports or museums for more inclusive experiences